MPI

2021-07-17

1 I/O

1.1 Basics

The basics are straightforward: a file is opened with mpi_file_open and closed with mpi_file_close. Once the file is open, data is written with mpi_file_write or mpi_file_write_at (mpi_file_write_all and mpi_file_write_at_all are the collective versions).

To write data again, the file pointer or the explicit offset has to be set to the new starting location. The explicit offset is calculated by counting the number of data elements already written. The file pointer position can be calculated the same way, or obtained from the procedures mpi_file_get_position and mpi_file_get_byte_offset and then advanced to the starting location of the next write operation.

mpi_file_get_position gets the current position of the individual file pointer in units of etype. The result is offset. Passing offset to mpi_file_get_byte_offset converts this view-relative offset (in units of etype) into a displacement in bytes from the beginning of the file. The result is disp.

Next, mpi_file_set_view changes the file view of the process, after which mpi_file_write_all performs the parallel write. Note that mpi_file_write_all is a blocking call. Note also that I have not yet figured out how to use mpi_file_write_at when the previous write was done with mpi_file_set_view and mpi_file_write.

1.2 File View

A file view is a triplet of arguments

(displacement, etype, filetype) that is passed to

MPI_File_set_view.

displacement = number of bytes to be skipped from the start of the file

etype = unit of data access (can be any basic or derived datatype)

filetype = specifies the layout of etypes within the file

The file view sets the starting location of a write through displacement, which is measured in bytes from the head of the file.


1.3 Example

    program io
      implicit none
      continue
      !
      call writeBinFile(2)
      !
    contains
      !
      subroutine writeBinFile(n_write)
        use mpi
        integer, intent(in) :: n_write
        integer ierr, i, myrank, nrank, BUFSIZE, BYTESIZE, thefile
        ! BYTESIZE assumes 4-byte default integers
        parameter (BUFSIZE=10, BYTESIZE=BUFSIZE*4)
        integer buf(BUFSIZE)
        integer(kind=MPI_OFFSET_KIND) disp
        continue
        call MPI_INIT(ierr)
        call MPI_COMM_RANK(MPI_COMM_WORLD, myrank, ierr)
        call MPI_COMM_SIZE(MPI_COMM_WORLD, nrank, ierr)
        ! buf is 1-based, so fill buf(1:BUFSIZE) with rank-specific values
        do i = 1, BUFSIZE
          buf(i) = myrank * BUFSIZE + i
        end do
        call MPI_FILE_OPEN(MPI_COMM_WORLD, 'mpi_data.bin', &
                           MPI_MODE_WRONLY + MPI_MODE_CREATE, &
                           MPI_INFO_NULL, thefile, ierr)
        ! byte displacement of this rank's block in the first write
        disp = myrank * BYTESIZE
        ! 1. Individual file pointer
        ! call MPI_FILE_SET_VIEW(thefile, disp, MPI_INTEGER, &
        !                        MPI_INTEGER, 'native', &
        !                        MPI_INFO_NULL, ierr)
        ! call MPI_FILE_WRITE_ALL(thefile, buf, BUFSIZE, MPI_INTEGER, &
        !                         MPI_STATUS_IGNORE, ierr)
        ! 2. Explicit offset
        call MPI_FILE_WRITE_AT_ALL(thefile, disp, buf, BUFSIZE, MPI_INTEGER, &
                                   MPI_STATUS_IGNORE, ierr)
        if ( n_write > 1 ) then
          ! the second write starts after the nrank blocks of the first write
          disp = nrank * BYTESIZE + myrank * BYTESIZE
          ! 1. Individual file pointer
          ! call MPI_FILE_SET_VIEW(thefile, disp, MPI_INTEGER, &
          !                        MPI_INTEGER, 'native', &
          !                        MPI_INFO_NULL, ierr)
          ! call MPI_FILE_WRITE_ALL(thefile, buf, BUFSIZE, MPI_INTEGER, &
          !                         MPI_STATUS_IGNORE, ierr)
          ! 2. Explicit offset
          call MPI_FILE_WRITE_AT_ALL(thefile, disp, buf, BUFSIZE, MPI_INTEGER, &
                                     MPI_STATUS_IGNORE, ierr)
        end if
        !
        call MPI_FILE_CLOSE(thefile, ierr)
        call MPI_FINALIZE(ierr)
      end subroutine writeBinFile
      !
    end program io

To write to a file multiple times, we should notice that the starting

location of the write operation must be set again after each write operation. The example above shows two simple plans for writing twice: 1) two rounds of (MPI_FILE_SET_VIEW + MPI_FILE_WRITE_ALL); 2) two rounds of (MPI_FILE_WRITE_AT_ALL). In both plans, displacement is measured from the head of the file for both write operations. A hybrid plan is (MPI_FILE_WRITE_AT_ALL) + (MPI_FILE_SET_VIEW + MPI_FILE_WRITE_ALL), again using the absolute displacement measured from the head of the file for both write operations. The last hybrid plan is (MPI_FILE_SET_VIEW + MPI_FILE_WRITE_ALL) + (MPI_FILE_WRITE_AT_ALL). Here the file view seems to mess up the displacement for MPI_FILE_WRITE_AT_ALL, and I have not been able to figure out how to implement this hybrid plan correctly.
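One possible reading of why the last hybrid plan misbehaves: as far as I understand the MPI standard, the offset given to MPI_FILE_WRITE_AT_ALL is counted in units of etype relative to the current file view, not in bytes from the head of the file. The following is a minimal sketch under that assumption, not a verified fix. The program name hybrid_write and the file name mpi_hybrid.bin are made up for illustration.

```fortran
program hybrid_write
  use mpi
  implicit none
  integer, parameter :: BUFSIZE = 10
  integer :: ierr, i, myrank, nrank, thefile, intsize
  integer :: buf(BUFSIZE)
  integer(kind=MPI_OFFSET_KIND) :: disp, offset

  call MPI_INIT(ierr)
  call MPI_COMM_RANK(MPI_COMM_WORLD, myrank, ierr)
  call MPI_COMM_SIZE(MPI_COMM_WORLD, nrank, ierr)
  ! size of MPI_INTEGER in bytes, queried instead of hard-coding 4
  call MPI_TYPE_SIZE(MPI_INTEGER, intsize, ierr)

  do i = 1, BUFSIZE
    buf(i) = myrank * BUFSIZE + i
  end do

  ! hypothetical output file name
  call MPI_FILE_OPEN(MPI_COMM_WORLD, 'mpi_hybrid.bin', &
                     MPI_MODE_WRONLY + MPI_MODE_CREATE, &
                     MPI_INFO_NULL, thefile, ierr)

  ! First write: file view + individual file pointer.
  ! disp is in bytes from the head of the file.
  disp = int(myrank, MPI_OFFSET_KIND) * BUFSIZE * intsize
  call MPI_FILE_SET_VIEW(thefile, disp, MPI_INTEGER, &
                         MPI_INTEGER, 'native', &
                         MPI_INFO_NULL, ierr)
  call MPI_FILE_WRITE_ALL(thefile, buf, BUFSIZE, MPI_INTEGER, &
                          MPI_STATUS_IGNORE, ierr)

  ! Second write: explicit offset while the view above is still active.
  ! Assumption: offset counts MPI_INTEGER elements from the start of this
  ! rank's view, so skipping nrank blocks lands at byte
  ! (nrank + myrank) * BUFSIZE * intsize of the file.
  offset = int(nrank, MPI_OFFSET_KIND) * BUFSIZE
  call MPI_FILE_WRITE_AT_ALL(thefile, offset, buf, BUFSIZE, MPI_INTEGER, &
                             MPI_STATUS_IGNORE, ierr)

  call MPI_FILE_CLOSE(thefile, ierr)
  call MPI_FINALIZE(ierr)
end program hybrid_write
```

An alternative under the same assumption would be to reset the view to displacement 0 with etype and filetype MPI_BYTE before the second write, so that byte displacements measured from the head of the file can be used again.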

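After one write operation done through the individual file pointer, setting displacement explicitly can be replaced by the following procedure calls:

    disp = nrank * BYTESIZE + myrank * BYTESIZE
    ! the following lines give the same disp as the above line
    ! (offset must be declared as integer(kind=MPI_OFFSET_KIND))
    call MPI_FILE_GET_POSITION(thefile,offset,ierr)
    call MPI_FILE_GET_BYTE_OFFSET(thefile,offset,disp,ierr)
    disp = disp + (nrank-1)*BYTESIZE

Since one write operation takes BYTESIZE per process, the file pointer sits right after the process's own block and needs to move forward by (nrank-1)*BYTESIZE to skip the blocks of the other processes. Writes with an explicit offset do not move the individual file pointer, so this only applies after MPI_FILE_SET_VIEW + MPI_FILE_WRITE_ALL.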

2 Derived Datatype

2.1 Indexed Datatype

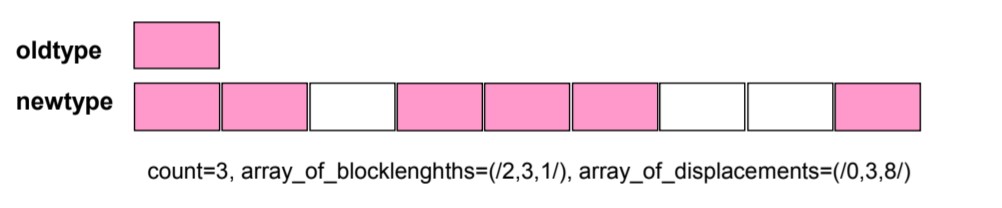

An indexed datatype is described by a list of block offsets and block lengths. It gathers multiple sections of an array into a single element of the newly created datatype.

For reference, check P14 in the following attached MPI DataTypes PDF.
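As a small illustration of block offsets and block lengths, the sketch below builds an indexed datatype from two blocks of a 16-element integer array and sends one element of it to the same process with MPI_SENDRECV. The block lengths (4, 2) and offsets (5, 12) are chosen for illustration only and are not taken from the figure above.

```fortran
program indexed_type
  use mpi
  implicit none
  integer, parameter :: N = 16
  integer :: ierr, myrank, newtype, i
  integer :: blocklens(2), displs(2)
  integer :: a(N), b(N)

  call MPI_INIT(ierr)
  call MPI_COMM_RANK(MPI_COMM_WORLD, myrank, ierr)

  a = (/ (i, i = 1, N) /)
  b = 0

  ! Two blocks: 4 integers starting at offset 5, 2 integers at offset 12.
  ! Offsets are zero-based and counted in units of MPI_INTEGER.
  blocklens = (/ 4, 2 /)
  displs    = (/ 5, 12 /)
  call MPI_TYPE_INDEXED(2, blocklens, displs, MPI_INTEGER, newtype, ierr)
  call MPI_TYPE_COMMIT(newtype, ierr)

  ! Send one element of the indexed type to ourselves and receive it
  ! as 6 contiguous integers.
  call MPI_SENDRECV(a, 1, newtype, myrank, 0, &
                    b, 6, MPI_INTEGER, myrank, 0, &
                    MPI_COMM_WORLD, MPI_STATUS_IGNORE, ierr)

  ! b(1:6) should now hold a(6:9) followed by a(13:14)
  if (myrank == 0) print *, b(1:6)

  call MPI_TYPE_FREE(newtype, ierr)
  call MPI_FINALIZE(ierr)
end program indexed_type
```

Sending to the same rank keeps the sketch runnable with a single process; in real use the indexed type would typically describe the data exchanged between different ranks.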

3 Tips

3.1 Run On Multi Nodes

On the Taicang cluster (太仓集群), the following command is needed to run on multiple nodes:

    mpirun -n 4 -hosts node2,node3 -perhost 2 -env I_MPI_FABRICS tcp ./test

3.2 OpenMPI hangs when

When the cluster has a virtual NIC in addition to the real NIC, OpenMPI hangs with the default parameters. The reason is that OpenMPI uses TCP to connect through the wrong NIC, i.e. virbr0. The Taicang cluster has the real NIC enp97s0f1 and the virtual NIC virbr0. The correct command for running OpenMPI across multiple nodes is

    mpirun -x LD_LIBRARY_PATH -n 2 -hostfile machine --mca btl_tcp_if_include enp97s0f1 ~/NFS_Project/NFS/relwithdebinfo_gnu/bin/nfs_opt_g nfs.json

The content of the machine file for running 2 procs is

    node3 slots=1
    node4 slots=1

Reference: 7. How do I tell Open MPI which IP interfaces / networks to use?

3.3 OpenMPI: passing environment variables to remote nodes

Running the NFR code on the remote nodes requires a complete setup of the environment variables there. NFR compiled with OpenMPI-4 and gfortran-10.2 needs to load libgfortran.so.5. An incomplete setup of the environment variables results in the error "error while loading shared libraries: libgfortran.so.5". The -x LD_LIBRARY_PATH option exports the local LD_LIBRARY_PATH to the remote processes. The correct command is

    mpirun -x LD_LIBRARY_PATH -n 2 -hostfile machine --mca btl_tcp_if_include enp97s0f1 ~/NFS_Project/NFS/relwithdebinfo_gnu/bin/nfs_opt_g nfs.json